Validity of the Inclusivity Index

The motivation for the Inclusivity Index grew out of an observation made in a diversity discussion group: “you can’t measure diversity.” This belief reflected frustration with existing surveys, which used agree-disagree scales called Likert Ratings to gauge student opinions on broad and flexible lists of questions that often merged diversity themes with unrelated inquiries. Measuring inclusivity requires an objective survey that is neutral and robust and that specifically minimizes sampling and cross-cultural biases. In contrast, the Likert Ratings technique produced tightly clustered results that were time-consuming and difficult to interpret. These concerns triggered a search for a better survey, one that would be neutral, bounded, focused on inclusivity and repeatable, and that would provide higher-fidelity measurements and greater resolution.

Assessing inclusivity in a school community is a psychometric measurement, and thus the concepts of psychometric validity and reliability were fundamental to the search. Developing the Inclusivity Index required defining a comprehensive set of quality and demographic dimensions to measure and choosing a research methodology. The search considered student age, survey time, potential for sample bias, and anonymity demands, as well as the challenge of administering the survey. Further, the data needed to be easy to convert, present and analyze, and the survey had to be amenable to being repeated annually.

MaxDiff vs. Likert Ratings and Psychometric Validity

The choice of MaxDiff as a replacement for Likert scaling was critical in creating the Inclusivity Index. This decision weighed heavily the ability to achieve psychometric validity. The research considered approaches such as conjoint and MaxDiff and compared both to Likert Ratings surveys. While both conjoint and MaxDiff were appealing, MaxDiff allowed a larger number of qualities to be measured and imposed less of an intellectual load on the students. Equally important, we learned that MaxDiff outperformed Likert Ratings in parallel psychometric validity tests and could actually exceed the threshold of psychometric reliability, a criterion the Likert Ratings technique failed to meet.

A test comparing the MaxDiff and Likert Ratings techniques illustrates this by using both approaches to assess psychological profiles under the Five-Factor Model of personality traits. This test largely parallels the Inclusivity Index in its respondents, objectives and design. As Exhibit 1 portrays, the exercise composed four statements for each of the five traits. The resulting 20 statements were presented identically in two surveys, one MaxDiff and the other Likert Ratings. In the exercise, 729 respondents indicated “how accurately each item describes them.”

Exhibit 1

Building on the two datasets produced by the surveys, a multitrait-multimethod correlation matrix verified that the two approaches distinctly measure the five individual traits. Exhibit 2 shows that the correlation coefficients for the same traits, the five shaded in yellow, are substantially higher than the 20 unshaded correlations that compare dissimilar traits. These five large correlation coefficients verify that both techniques measure the personality traits.
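The comparison behind such a matrix can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the dictionary layout (trait name mapped to one score per respondent) and the sample scores are hypothetical and are not taken from the study. The sketch correlates every trait measured by one method with every trait measured by the other, then checks that the same-trait correlations exceed the different-trait ones. With five traits, this yields the 5 same-trait and 20 different-trait coefficients described above.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant scores")
    return sxy / sqrt(sxx * syy)


def cross_method_matrix(method_a, method_b):
    """Correlate each trait from method A with each trait from method B.

    Both arguments map a trait name to a list of per-respondent scores,
    with respondents in the same order in every list.
    """
    return {
        (trait_a, trait_b): pearson(scores_a, scores_b)
        for trait_a, scores_a in method_a.items()
        for trait_b, scores_b in method_b.items()
    }


def same_trait_dominates(matrix):
    """True if every same-trait correlation exceeds every cross-trait one."""
    same = [r for (a, b), r in matrix.items() if a == b]
    cross = [abs(r) for (a, b), r in matrix.items() if a != b]
    return min(same) > max(cross)


# Hypothetical scores for four respondents and two traits (illustration only).
maxdiff_scores = {"Openness": [1, 2, 3, 4], "Extraversion": [4, 1, 3, 2]}
likert_scores = {"Openness": [2, 4, 6, 8], "Extraversion": [8, 2, 6, 4]}

matrix = cross_method_matrix(maxdiff_scores, likert_scores)
for pair, r in sorted(matrix.items()):
    print(pair, round(r, 2))
print("same-trait correlations dominate:", same_trait_dominates(matrix))
```

The check compares absolute values of the cross-trait correlations, so a strong negative correlation between dissimilar traits would also count against discriminating between them.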

Exhibit 3 advances the assessment by calculating Cronbach’s Alpha for each technique. Cronbach’s Alpha assesses internal consistency within a group of measurements. In this exercise, five factors (Openness, Conscientiousness, Extraversion, Agreeableness and Neuroticism) are measured, each with four different items that are the same in both survey techniques. A high Cronbach’s Alpha indicates a high level of reliability that the four items are measuring the same factor. The test requires a minimum value of 0.70 for a measure to be considered potentially reliable and at least 0.80 for it to be assured as reliable. As the table shows, all five traits in the MaxDiff survey exceed the 0.80 standard, while only one of the Likert Ratings results reaches the 0.80 threshold. In each case MaxDiff produces a larger Cronbach’s Alpha than Likert Ratings, and all the MaxDiff values are near or above 0.90. This work assures us that MaxDiff outperforms Likert Ratings and, in an absolute sense, produces results sufficient to deem the technique reliable as it relates to the concept of psychometric validity.
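For readers who want to see how such a coefficient is obtained, the following is a minimal Python sketch of the standard Cronbach’s Alpha formula: alpha = k / (k - 1) × (1 - sum of item variances / variance of respondents’ total scores), where k is the number of items. The function name and the sample responses are hypothetical and are not the study’s data; only the two thresholds in the helper come from the text above.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's Alpha for one factor.

    `items` is a list of k lists; each inner list holds one item's scores
    for the same n respondents, in the same respondent order.
    """
    k = len(items)
    if k < 2:
        raise ValueError("need at least two items")
    n = len(items[0])
    if n < 2 or any(len(col) != n for col in items):
        raise ValueError("each item needs the same number (>= 2) of scores")

    # Sum of the sample variances of the individual items.
    item_variance_sum = sum(variance(col) for col in items)

    # Variance of each respondent's total score across the k items.
    totals = [sum(scores) for scores in zip(*items)]
    total_variance = variance(totals)
    if total_variance == 0:
        raise ValueError("alpha is undefined when total scores do not vary")

    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


def reliability_label(alpha):
    """Apply the 0.70 and 0.80 thresholds quoted in the text."""
    if alpha >= 0.80:
        return "reliable"
    if alpha >= 0.70:
        return "potentially reliable"
    return "below threshold"


# Hypothetical responses: four items for one trait, six respondents.
example_items = [
    [4, 5, 2, 3, 5, 1],
    [4, 4, 2, 3, 5, 2],
    [5, 5, 1, 3, 4, 1],
    [3, 5, 2, 2, 5, 1],
]
alpha = cronbach_alpha(example_items)
print(round(alpha, 3), reliability_label(alpha))
```

In this exercise the function would be applied ten times: once per trait for the MaxDiff scores and once per trait for the Likert Ratings scores.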

Lessons from Existing Surveys Inspire the Pos-Neg Most-Least Choice MaxDiff Construct

Next, the work studied experience with the existing surveys and contrasted the Likert Ratings technique with potential ways to apply the MaxDiff technique. This exercise developed eight criteria to consider in the design and ultimately to use in judging the alternative approaches. The criteria were:

1. Positivity bias

2. Cultural interpretation differences

3. Number of decisions per quality across the complete survey

4. Results range:

a. Score mode % — the highest percentage for a single score

b. Breadth of scores

5. Interpretation: can we facilitate access to data for hypothesis testing vs. summary interpretations

6. Faculty alignment survey as another source of insight and comparison

7. Number of survey topics researchable in a 15-minute survey

8. Reliability and the ability to achieve psychometric validity

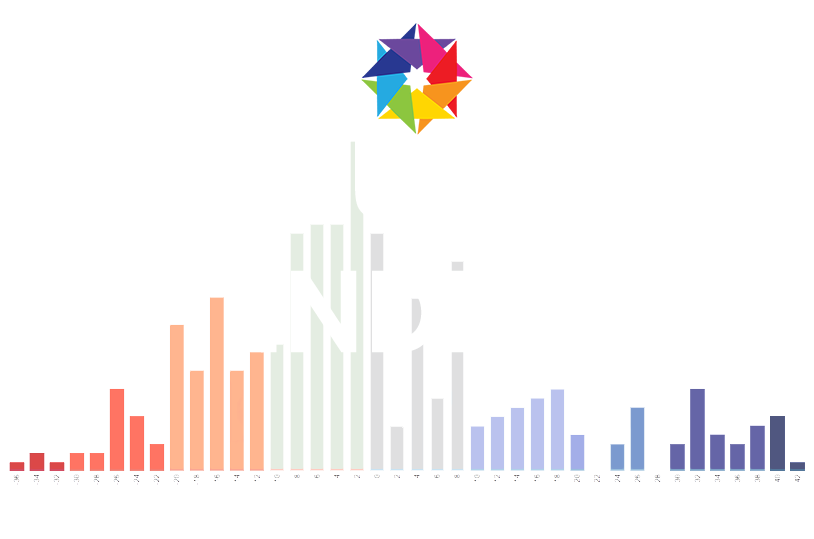

With these elements in mind, the Inclusivity Index team invented the “Positive-Negative Most-Least” approach in the MaxDiff form. We piloted the survey and then used the criteria to contrast the invented technique with results from past surveys using Likert Ratings. For example, relative to Criterion #4, the graph on the right (Exhibit 4) compares the range of results for the two techniques. For the “agree-disagree scale” approach with a 5-level scale, typically 45% or more of the feedback clustered at the single “agree” level of +1, and nearly 90% of the feedback fell within three levels: 0, 1 and 2. In contrast, the actual Inclusivity Index results spread the feedback for each quality assessed over six levels, with the mode at 22%, less than half that of the agree-disagree technique.
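The two range measures in Criterion #4 can be computed directly from a list of scores. The Python sketch below is illustrative only: the function names are hypothetical, and the two score lists are made-up distributions chosen merely to echo the percentages quoted above, not the actual survey data. The level labels 0 to 5 used for the Index-style list are likewise an assumption.

```python
from collections import Counter


def score_mode_share(scores):
    """Share of responses that fall on the single most common score."""
    counts = Counter(scores)
    return max(counts.values()) / len(scores)


def score_breadth(scores):
    """Number of distinct score levels that respondents actually used."""
    return len(set(scores))


def top_k_share(scores, k):
    """Share of responses covered by the k most common score levels."""
    counts = Counter(scores)
    return sum(count for _, count in counts.most_common(k)) / len(scores)


# Made-up 5-level agree-disagree responses (levels -2 to +2), 100 in total.
likert_like = [1] * 45 + [0] * 22 + [2] * 22 + [-1] * 7 + [-2] * 4

# Made-up six-level responses with a flatter spread, 100 in total.
index_like = [0] * 10 + [1] * 14 + [2] * 18 + [3] * 22 + [4] * 20 + [5] * 16

for name, scores in (("agree-disagree", likert_like), ("index", index_like)):
    print(
        name,
        "mode share:", score_mode_share(scores),
        "breadth:", score_breadth(scores),
        "top-3 share:", top_k_share(scores, 3),
    )
```

A lower mode share together with a greater breadth indicates that responses are less clustered, which is the property the criterion is meant to capture.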

The table on the right, Exhibit 5, compares the “Likert Ratings” technique with the Inclusivity Index’s construct on all eight criteria, with the preferred method for each criterion shaded in green. As shown, the “Positive-Negative Most-Least” approach is the preferred method on seven of the eight criteria.

Exhibit 2

Exhibit 3

Exhibit 4

Exhibit 5

Exhibit 6

Exhibit 7

Satisfaction and Impact Are the Ultimate Test of Validity

The ultimate validation of the Inclusivity Index is its ability to improve schools. Through the first six years of use, schools repeating the survey improved their overall scores in each successive year and, most important, reduced the number of students reporting a negative experience. Since this precisely matches the aim of the schools embarking on this journey, it demonstrates the worth and effectiveness of the tool. In the end, the combined effort of driven school administrators and the Inclusivity Index makes the lives of a school’s students both better and more complete. Most important, it underpins the experience of building on the differences of those around you and demonstrates to students in their formative years, as well as to faculty and staff, the joy of fulfillment in belonging to and enriching an inclusive community.

Developing Tested and Reliable Survey Content

Beyond survey design, the validity of the Inclusivity Index depends on thoughtful and comprehensive content tailored to age. The Inclusivity Index content was derived from two recognized and reliable higher education surveys led by Sylvia Hurtado of the UCLA Higher Education Research Institute. These studies included one applied in California entitled “Diverse Learning Environments: Assessing and Creating Conditions for Student Success” and another at Cornell, the “Cornell Quality Study of Diversity: Student Experiences.” The choice of attributes for the Inclusivity Index was motivated by the dimensions used in these studies, as outlined in the graphic on the right, Exhibit 8.

Two qualities, Stress and Trauma, were added independently of the 19 derived from Hurtado’s work. Content development for the Grades 4 to 8 and K to 4 surveys followed a similar methodology, beginning with this list of 21 qualities and either eliminating or modifying each to match the age and developmental stage of the students. Additional research by separate committees for both surveys added new qualities as follows:

Meeting the MaxDiff Guidelines for Statistical Significance

Finally, over more than 20 years, MaxDiff survey experts have developed a separate set of minimum criteria for this form of survey to ensure statistical significance. The relevant guidelines are detailed in the table on the right (Exhibit 7). This color-coded table indicates in green that, in a typical school application, the Inclusivity Index survey meets or exceeds these guidelines.